It is now politically incorrect to refer to the Bush tax cuts as the ‘Bush tax cuts’:

The Congressional Research Service withdrew a report that found no correlation between top tax rates and economic growth after senators raised concerns.

NYTimes: Nonpartisan Tax Report Withdrawn After G.O.P. Protest

- Posted using BlogPress from my iPad

Welcome!

I know you have many choices to support your view of reality; thanks for choosing shut-it-down. (See my first post for the etymology.)

Friday, November 2, 2012

Monday, October 29, 2012

The wisdom of (polling) crowds?

Interesting (23 Oct.) essay by Nate Silver on his FiveThirtyEight blog on The Virtues and Vices of Election Prediction Markets.

As of this writing, a week prior to the election, Nate Silver's FiveThirtyEight forecast puts Obama's odds of winning at 75% and Romney's at 25%.

The electronic markets favor Obama, but give lower odds:

- The Iowa Electronic Markets put odds of Obama winning at 62% and Romney at 38%.

- Intrade also puts the Obama odds at 62%.

Republican/right wing pundits have their own reality, e.g.,

- (22 Oct) Josh Jordan at The National Review thinks Nate Silver's analysis is flawed, and that he is basically a partisan hack who got lucky (and/or inside information) in 2008.

Whatever the explanation, Silver’s strong showing in the 2008 election, coupled with his consistent predictions that Obama will win in November, has given Democrats a reason for optimism. While there is nothing wrong with trying to make sense of the polls, it should be noted that Nate Silver is openly rooting for Obama, and it shows in the way he forecasts the election.

On September 30, leading into the debates, Silver gave Obama an 85 percent chance and predicted an Electoral College count of 320–218. Today, the margins have narrowed — but Silver still gives Obama a 67 percent chance and an Electoral College lead of 288–250, which has led many to wonder if he has observed the same movement to Romney over the past three weeks as everyone else has. [He has, but doesn't see momentum.] Given the fact that an incumbent president is stuck at 47 percent nationwide, the odds might not be in Obama’s favor, and they certainly aren’t in his favor by a 67–33 margin.

- The Weekly Standard (29 Oct) reports this New Projection of Election Results: Romney 52, Obama 47

The bipartisan Battleground Poll, in its “vote election model,” is projecting that Mitt Romney will defeat President Obama 52 percent to 47 percent.

Samuel Popkin at The American Prospect, in his review of Silver's new book, The Signal and the Noise: Why So Many Predictions Fail—But Some Don’t (Penguin Press) writes...

Based on the numbers, Silver thinks Obama has an edge in the Electoral College, and in his most recent forecast over the weekend he predicts that there are a little less than three chances in four that Obama will win. This is not the same thing as being certain. Silver knows what he doesn’t know, and this should be more reassuring to us than forecasters who use fewer sources of data yet appear more certain.

In another week we'll know who's been fooled!

In the late Nobel laureate Richard Feynman’s appendix to the Rogers Commission report on the Space Shuttle Challenger accident, Feynman’s concluding words were “Nature cannot be fooled.” Feynman would have applauded The Signal and the Noise, for Silver shows in area after area that while experts cannot fool nature, it’s all too easy for them to fool themselves and deceive others. No technical or statistical expertise is needed to appreciate this book. The more you know or think you know, though, the more you will learn about the limits of expertise and the work we must do to minimize unavoidable biases. The Challenger that couldn’t explode followed the Titanic that couldn’t sink. No institution, no theory, no data set is too big to fail.

- Posted using BlogPress from my iPad

Thursday, August 16, 2012

Mayberry, R.I.P.

Frank Rich uses the occasion of Andy Griffith's death as the launching pad for an essay in New York magazine, Mayberry R.I.P., on American "exceptionalism" and the cottage industry of declinist literature.

In reality, The Andy Griffith Show didn’t transcend the deep divides of its time. It merely ignored them. “Local control” of Mayberry saw to it that this southern town would remain lily-white for all eight years of its fictive existence rather than submit to any civil-rights laws that would require the federal government’s “top-down management” to enforce. Nor was television always so simple back then. Just seven months before The Andy Griffith Show’s 1960 debut on CBS, the same network broadcast an episode of The Twilight Zone, “The Monsters Are Due on Maple Street," in which the placid all-American denizens of an (all-white) suburban enclave turn into a bloodthirsty mob hunting down any aliens in human camouflage that might have infiltrated the neighborhood. As the show’s creator and narrator, Rod Serling, makes clear in his parable’s concluding homily (“Prejudices can kill …”), the hovering aliens who threatened to drive Americans to civil unrest and self-destruction at the dawn of the Kennedy era were not necessarily from outer space.

The wave of nostalgia for Andy Griffith’s Mayberry and for the vanished halcyon America it supposedly enshrined says more about the frazzled state of America in 2012 and our congenital historical amnesia than it does about the reality of America in 1960. The eulogists’ sentimental juxtapositions of then and now were foreordained. If there’s one battle cry that unites our divided populace, it’s that the country has gone to hell and that almost any modern era, with the possible exception of the Great Depression, is superior in civic grace, selfless patriotism, and can-do capitalistic spunk to our present nadir. For nearly four years now—since the crash of ’08 and the accompanying ascent of Barack Obama—America has been in full decline panic. Books by public intellectuals, pundits, and politicians heralding our imminent collapse have been one of the few reliable growth industries in hard times.

Monday, August 6, 2012

"...the metaness of it all"

The Mars Reconnaissance Orbiter took this photo of the Mars Curiosity rover during its descent.

Alexis Madrigal writes in The Atlantic

Details aside, I find the coordination of our robots on Mars to be more mindblowing than even the amazing descent of the rover. This is a nascent exploration ecosystem. And the metaness of it all -- humans tweeting about watching a humanmade satellite watch a humanmade rover descend on Mars -- feels profound, not forced. The layers of effort and decades of organization necessary to make this feat possible provide the grit and structure for the easy triumphal narrative. 10 years of stale sweat, bad coffee, and thankless work for seven minutes of glory in the long story of knowing where we live.

- Posted using BlogPress from my iPhone

Tuesday, July 24, 2012

“Is it possible I invented the whole damn Internet?”

As Timothy B. Lee at Ars Technica puts it, in response to a stunning piece of political correctness (chock full of factual incorrectness), "The Wall Street Journal has earned a reputation for producing in-depth and meticulously fact-checked news coverage. Unfortunately, it doesn't always apply that same high standard of quality to their editorial page."

Opening with SciAm blogger Michael Moyer, in a post titled Yes, Government Researchers Really Did Invent the Internet

“It’s an urban legend that the government launched the Internet,” writes Gordon Crovitz in an opinion piece in today’s Wall Street Journal. Most histories cite the Pentagon-backed ARPANet as the Internet’s immediate predecessor, but that view undersells the importance of research conducted at Xerox PARC labs in the 1970s, claims Crovitz. In fact, Crovitz implies that, if anything, government intervention gummed up the natural process of laissez faire innovation.

...

But Crovitz’s story is based on a profound misunderstanding of not only history, but technology. Most egregiously, Crovitz seems to confuse the Internet—at heart, a set of protocols designed to allow far-flung computer networks to communicate with one another—with Ethernet, a protocol for connecting nearby computers into a local network. (Robert Metcalfe, a researcher at Xerox PARC who co-invented the Ethernet protocol, today tweeted tongue-in-cheek “Is it possible I invented the whole damn Internet?”)

...

Other commenters, including Timothy B. Lee at Ars Technica and veteran technology reporter Steve Wildstrom, have noted that Crovitz’s misunderstandings run deep. He also manages to confuse the World Wide Web (incidentally, invented by Tim Berners Lee while working at CERN, a government-funded research laboratory) with hyperlinks, and an internet—a link between two computers—with THE Internet.

But perhaps the most damning rebuttal comes from Michael Hiltzik, the author [of] “Dealers of Lightning,” a history of Xerox PARC that Crovitz uses as his main source for material. “While I’m gratified in a sense that he cites my book,” writes Hiltzik, “it’s my duty to point out that he’s wrong. My book bolsters, not contradicts, the argument that the Internet had its roots in the ARPANet, a government project.”

Alex Pareene at Salon writes

So, basically, the government had its grubby innovation-suppressing paws all over the creation of the Internet, a fact reinforced by Michael Hiltzik’s response to Gordon Crovitz in the L.A. Times. (Hiltzik is the author of a book cited by Crovitz in his column.) Basically Crovitz got everything wrong:

Crovitz then points out that TCP/IP, the fundamental communications protocol of the Internet, was invented by Vinton Cerf (though he fails to mention Cerf’s partner, Robert Kahn). He points out that Tim Berners-Lee “gets credit for hyperlinks.”

Lots of problems here. Cerf and Kahn did develop TCP/IP — on a government contract! And Berners-Lee doesn’t get credit for hyperlinks–that belongs to Doug Engelbart of Stanford Research Institute, who showed them off in a legendary 1968 demo you can see here. Berners-Lee invented the World Wide Web–and he did so at CERN, a European government consortium.

And finally, Ethernet was “by no means a precursor of the Internet.” So: Government created the Internet, just like we thought before. Even the ornery libertarian-leaning geeks of Slashdot concede the point.

(One more fun fact: Al Gore genuinely did have a formative role in creating the Internet! The 1991 bill that funded, among lots of other important-for-the-development-of-the-Internet things, the creation of Mosaic, the mother of all web browsers, was known as "the Gore Bill." And Mosaic was created by a government-funded unit at the public University of Illinois at Urbana-Champaign. The government invented the Internet.)

People familiar with the history of the Internet will, obviously, barely notice this attempt at partisan revisionism. But I am very confident that “The Government Had Nothing To Do With Inventing The Internet That Is a Liberal Lie” will become one of those wonderful myths.... You’ll be seeing this one pop up — as common knowledge, probably — in Corner posts and Fox News hits for years to come. When some random idiot Republican candidate in 2014 or 2016 makes the rounds on the blogs for claiming that the private sector invented the Internet, just remember that it all started right here, in the Wall Street Journal.

UPDATE: Oh, lord. Two minutes after publishing I see that Fox’s John Stossel is on board with the new narrative!

Steve Wildstrom sums it up:

The history of the internet is not particularly in dispute and we have the great good fortune that most of the pioneers who made it happen are still with us and able to share their stories. (For example, my video interviews with Cerf and Kahn.) In a nutshell, the internet began as a Defense Dept. research project designed to create a way to facilitate communication among research networks. It was almost entirely the work of government employees and contractors. It was split into military and civilian pieces, the latter run by the National Science Foundation. By the early 1990s, businesses were starting to see commercial possibilities and the private sector began building networks that connected with NSFnet. After initially resisting commercialization, NSF gave in and withdrew from the internet business in 1995, fully privatizing the network.

To paraphrase Yogi Berra, you can look it up in a book–or a web site.

- Posted using BlogPress from my iPhone

Sunday, July 22, 2012

Round up the usual suspects

The Aurora, CO theater shooting unfolded

when a man strode to the front in a multiplex near Denver and opened fire. At least 12 people were killed and 58 wounded, with witnesses describing a scene of claustrophobia, panic and blood. Minutes later, the police arrested James Holmes, 24, in the theater’s parking lot.

There will of course be the usual news cycle, but only until our attention wanes and we have another financial industry or celebrity scandal. Gun massacres are simply a recurring and accepted part of the American landscape, a fact captured by cartoonists

Tom Tomorrow (This Modern World):

I wrote this cartoon after the Gabby Giffords shooting in January of 2011. It remains tragically relevant.

Sigh

and Ruben Bolling (Tom the Dancing Bug):

And of course, here we go again. I did this comic shortly after the Columbine [1999] shootings.

Teen inkblot goes on rampage across U.S.

And after the Virginia Tech shootings in 2007.

On The Monkey Cage blog, political scientist Patrick Egan argues The Declining Culture of Guns and Violence in the United States

The massacre unleashed by James Holmes in Aurora, Colo. shortly after midnight on Friday is a tragedy of national proportions. Like other mass shootings before it—Columbine in 1999 and Virginia Tech in 2007 come to mind—it leaves us desperate for explanations in its wake. There are those who blame our nation’s relative paucity of gun control laws and others decrying the power of the gun lobby. Cultural explanations abound, too. On the right, one Congressman has pinned the blame on long-term national cultural decline. On the left, fingers are pointed at America’s “gun-crazy” culture.

But as pundits and politicians react, they would do well to keep in mind two fundamental trends about violence and guns in America that are going unmentioned in the reporting on Aurora.

First, we are a less violent nation now than we’ve been in over forty years. In 2010, violent crime rates hit a low not seen since 1972; murder rates sunk to levels last experienced during the Kennedy Administration. Our perceptions of our own safety have shifted, as well. In the early 1980s, almost half of Americans told the General Social Survey (GSS) they were “afraid to walk alone at night” in their own neighborhoods; now only one-third feel this way.

...

Thus long-term trends suggest that we are in fact currently experiencing a waning culture of guns and violence in the United States. This is undoubtedly helping to dampen the public’s support for both gun control and the death penalty. There are growing partisan gaps on attitudes regarding the two policies, but enthusiasm for both has declined recently in lockstep with the drop in crime and violence. The total effects of these trends on opinion and policy remain to be seen, but one thing is clear: they defy easy ideological explanation.

Finally, here is incontrovertible proof of the Founders' intent regarding 100-round assault-rifle magazines, based on this previously undiscovered transcript of a June 12, 1787, debate on the Second Amendment at the Constitutional Convention.

- Posted using BlogPress from my iPhone

Friday, July 20, 2012

NOAA Releases Report on Extreme Weather Events

From the AAAS Policy Alert (18 July 2012):

NOAA Releases Report on Extreme Weather Events. The July 2012 study [PDF] by the National Oceanic and Atmospheric Administration, "Explaining Extreme Events of 2011 From a Climate Perspective," found that climate change increased the likeliness of the occurrence of recent extreme weather events. For example, scientists concluded that climate change made the 2011 Texas drought 20 times more likely to occur, and Britain's 2011 November heat wave 62 times more likely to occur.

NOAA also announced recently that the 12-month period between July 2011 and June 2012 was the hottest in U.S. recorded history.

- Posted using BlogPress from my iPad

Friday, July 13, 2012

So papa, how do you like the iPad we got you?

From a German comedy show (but no need to understand the language to get it).

(Thanks to Jesse)

- Posted using BlogPress from my iPad

Thursday, July 12, 2012

Why There is Something Rather than Nothing

Nothin' from nothin' leaves nothin'

You gotta have somethin'

If you wanna be with me

—BILLY PRESTON

"Nothing From Nothing"

(Billy Preston and Bruce Fisher)

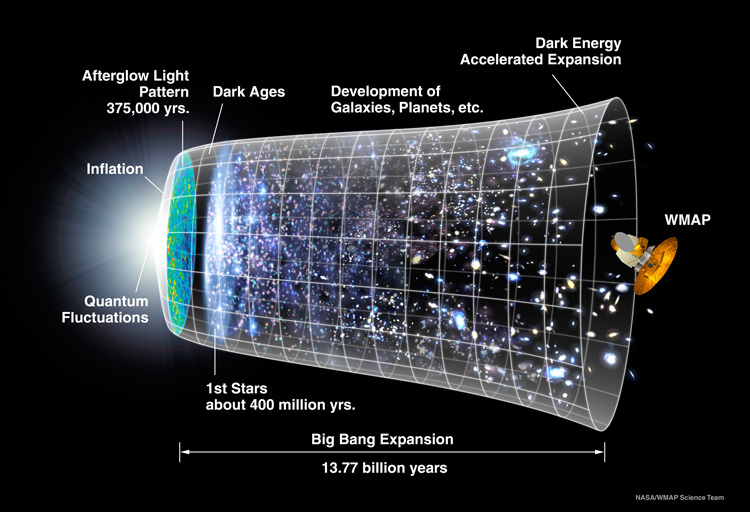

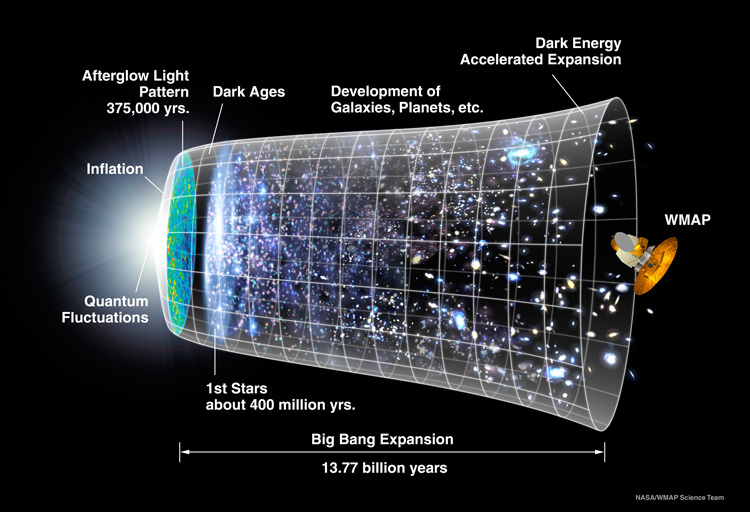

(Tap the above image to link to the NASA page for more image options)

In an eSkeptic column, Michael Shermer summarizes scientific thinking on the question of...

Why is there something rather than nothing? The question is usually posed by Christian apologists as a rhetorical argument meant to pose as the drop-dead killer case for God that no scientist can possibly answer. Those days are over. Even though scientists are not in agreement on a final answer to the now non-rhetorical question, they are edging closer to providing logical and even potentially empirically testable hypotheses to account for the universe. Here are a dozen possible answers to the question....

Nothing is Negligible: Why There is Something Rather than Nothing

In the meantime, while scientists sort out the science to answer the question Why is there something instead of nothing?, in addition to reviewing these dozen answers it is also okay to say “I don’t know” and keep searching. There is no need to turn to supernatural answers just to fulfill an emotional need for explanation. Like nature, the mind abhors a vacuum, but sometimes it is better to admit ignorance than feign certainty about which one knows not. If there is one lesson that the history of science has taught us it is that it is arrogant to think that we now know enough to know that we cannot know. Science is young. Let us have the courage to admit our ignorance and to keep searching for answers to these deepest questions.

- Posted using BlogPress from my iPad

Wednesday, July 11, 2012

The Higgs boson explained with animation

From Open Culture, The Higgs Boson Explained

Here we have Daniel Whiteson, a physics professor at UC Irvine, giving us a fuller explanation of the Higgs Boson, mercifully using animation to demystify the theory and the LHC experiments that may confirm it sooner or later.

- Posted using BlogPress from my iPad

How long does it take to become a native?

The article Killed by Thousands, Varmint Will Never Quit is interesting...

Nutria are now believed to be in 17 or more states. They are endemic throughout the Gulf Coast, and there are pockets in Oregon and Washington. Louisiana’s population, once estimated to be the largest at 20 million, has fallen after instituting a bounty program for pelts.

...but in the animated short Hi! I'm a Nutria by the filmmaker Drew Christie, a rodent living in Washington State defends himself against criticism that he is an invasive species and asks, “How long does it take to become a native?”

"I know I'm not supposed to be here, but neither are you."

- Posted using BlogPress from my iPad

Monday, July 9, 2012

"Plus ça change, plus c'est la même chose."

As prompted by the Vanity Fair article, Microsoft’s Downfall: Inside the Executive E-mails and Cannibalistic Culture That Felled a Tech Giant

"Stack Management"

Eichenwald’s conversations reveal that a management system known as “stack ranking”—a program that forces every unit to declare a certain percentage of employees as top performers, good performers, average, and poor—effectively crippled Microsoft’s ability to innovate. “Every current and former Microsoft employee I interviewed—every one—cited stack ranking as the most destructive process inside of Microsoft, something that drove out untold numbers of employees,” Eichenwald writes. “If you were on a team of 10 people, you walked in the first day knowing that, no matter how good everyone was, 2 people were going to get a great review, 7 were going to get mediocre reviews, and 1 was going to get a terrible review,” says a former software developer. “It leads to employees focusing on competing with each other rather than competing with other companies.”

"Fumbling the Future" *

According to Eichenwald, Microsoft had a prototype e-reader ready to go in 1998, but when the technology group presented it to Bill Gates he promptly gave it a thumbs-down, saying it wasn’t right for Microsoft. “He didn’t like the user interface, because it didn’t look like Windows,” a programmer involved in the project recalls.

“Windows was the god—everything had to work with Windows,” Stone tells Eichenwald. “Ideas about mobile computing with a user experience that was cleaner than with a P.C. were deemed unimportant by a few powerful people in that division, and they managed to kill the effort.”

* For a refresher on the above etymology, I've pulled a few excerpts from the book Fumbling the Future: How Xerox Invented, Then Ignored, the First Personal Computer (1988):

Thursday, July 5, 2012

Lonesome George (1912? - 2012)

The New York Times reports A Giant Tortoise’s Death Gives Extinction a Face

The world took notice when Lonesome George died, marking the end of his subspecies. But for researchers and workers in the Galápagos Islands, his death also takes a personal tone.

George’s death was a singular moment, representing the extinction of a creature right before human eyes — not dinosaurs wiped out eons ago or animals consigned to oblivion by hunters who assumed there would always be more. That thought was expressed at the shops and restaurants that are the research center’s neighbors on Charles Darwin Avenue.

“We have witnessed extinction,” said a blackboard in front of one business. “Hopefully we will learn from it.”

- Posted using BlogPress from my iPad

"Thanks, nature"

CERN has announced discovery (at a five sigma level — or 1 in 3.5 million probability of chance) of the Higgs boson, based on "a breakneck analysis of about 800 trillion proton-proton collisions over the last two years."
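For anyone curious where the "1 in 3.5 million" comes from, here is a minimal sketch of the arithmetic. It assumes the figure is the one-sided tail probability of a standard normal distribution at five standard deviations, which is my reading of the convention and not something spelled out in the announcement. The function name `one_sided_tail` is my own.

```python
import math


def one_sided_tail(sigma: float) -> float:
    """Probability that a standard normal variable exceeds `sigma`.

    Assumption: "five sigma" refers to this one-sided Gaussian tail.
    """
    # The upper tail of the standard normal is 0.5 * erfc(x / sqrt(2)).
    return 0.5 * math.erfc(sigma / math.sqrt(2))


p = one_sided_tail(5.0)
print(f"chance of a fluke at 5 sigma: {p:.3e}")
print(f"which is about 1 in {1 / p:,.0f}")
```

The result comes out close to one chance in 3.5 million, matching the number quoted above. A two-sided convention would give roughly twice that probability.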

Dennis Overbye writes in The New York Times:

Physicists had been icing the Champagne ever since last December. Two teams of about 3,000 physicists each — one named Atlas, led by Fabiola Gianotti, and the other CMS, led by Dr. Incandela — operate giant detectors in the collider, sorting the debris from the primordial fireballs left after proton collisions.

Last winter, they both reported hints of the same particle. They were not able, however, to rule out the possibility that it was a statistical fluke. Since then, the collider has more than doubled the number of collisions it has recorded.

The results announced Wednesday capped two weeks of feverish speculation and Internet buzz as the physicists, who had been sworn to secrecy, did a breakneck analysis of about 800 trillion proton-proton collisions over the last two years.

Up until last weekend, physicists at the agency were saying that they themselves did not know what the outcome would be. Expectations soared when it was learned that the five surviving originators of the Higgs boson theory had been invited to the CERN news conference.

The December signal was no fluke, the scientists said Wednesday. The new particle has a mass of about 125.3 billion electron volts, as measured by the CMS group, and 126 billion according to Atlas. Both groups said that the likelihood that their signal was a result of a chance fluctuation was less than one chance in 3.5 million, “five sigma,” which is the gold standard in physics for a discovery.

On that basis, Dr. Heuer said that he had decided only on Tuesday afternoon to call the Higgs result a “discovery.”

He said, “I know the science, and as director general I can stick out my neck.”

Dr. Incandela’s and Dr. Gianotti’s presentations were repeatedly interrupted by applause as they showed slide after slide of data presented in graphs with bumps rising like mountains from the sea.

Dr. Gianotti noted that the mass of the putative Higgs, apparently one of the heaviest subatomic particles, made it easy to study its many behaviors. “Thanks, nature,” she said.

From Overbye's blog post, What in the World Is a Higgs Boson?

Last winter Lisa Randall, a prominent Harvard theorist, sat down with me in a sort of virtual sense to talk about this quest. Everything she said is still true.

Q.

In 1993, the United States Congress canceled a larger American collider, the superconducting super collider, which would have been bigger than the CERN machine. Would it have found the Higgs particle years ago?

A.

Yes, if it had gone according to schedule. And it would have been able to find things that weren't a simple Higgs boson, too. The Large Hadron Collider can do such searches as well, but with its lower energy the work is more challenging and will require more time.

- Posted using BlogPress from my iPad

Tuesday, June 26, 2012

SCOTUS

It's an even-to-good bet that when the Court's decision regarding the Affordable Care Act (ACA, a.k.a. Obamacare) is announced, likely this week, it will overturn at least the insurance mandate (a.k.a. "make us eat broccoli"). Many expect a 5-4 opinion that will break down as follows:

Uphold: Kagan, Sotomayor, Ginsburg, Breyer, and possibly Kennedy

Overturn: Roberts, Alito, Scalia, Thomas, and probably Kennedy

There's spirited debate over the extent to which the Court has become politicized. According to arguments cited in the Wikipedia article Supreme Court of the United States, our "preconceptions" concerning the Court may be wrong:

In an article in SCOTUSblog,[89] Tom Goldstein argues that the popular view of the Supreme Court as sharply divided along ideological lines and each side pushing an agenda at every turn is "in significant part a caricature designed to fit certain preconceptions." He points out that in the 2009 term, almost half the cases were decided unanimously, and only about 20% decided by a 5-to-4 vote; barely one in ten cases involved the narrow liberal/conservative divide (fewer if the cases where Sotomayor recused herself are not included). He also points to several cases that seem to fly against the popular conception of the ideological lines of the Court.[90][91] Goldstein argues that the large number of pro-criminal-defendant summary dismissals (usually cases where the justices decide that the lower courts significantly misapplied precedent and reverse the case without briefing or argument) are an illustration that the conservative justices have not been aggressively ideological. Likewise, Goldstein states that the critique that the liberal justices are more likely to invalidate acts of Congress, show inadequate deference to the political process, and be disrespectful of precedent, also lacks merit: Thomas has most often called for overruling prior precedent (even if long standing) that he views as having been wrongly decided, and during the 2009 term Scalia and Thomas voted most often to invalidate legislation.

On the other hand, Ezra Klein blogs Of course the Supreme Court is Political:

While I was reporting out my New Yorker piece, I spoke with Akhil Reid Amar, a leading constitutional law scholar at Yale, who thinks that a 5-4 party-line vote against the mandate would be shattering to the court’s reputation for being above politics. “I’ve only mispredicted one big Supreme Court case in the last 20 years,” he told me. “That was Bush v. Gore. And I was able to internalize that by saying they only had a few minutes to think about it and they leapt to the wrong conclusion. If they decide this by 5-4, then yes, it’s disheartening to me, because my life was a fraud. Here I was, in my silly little office, thinking law mattered, and it really didn’t. What mattered was politics, money, party, and party loyalty.”

In that New Yorker piece, Unpopular Mandate, Klein writes:

On March 23, 2010, the day that President Obama signed the Affordable Care Act into law, fourteen state attorneys general filed suit against the law’s requirement that most Americans purchase health insurance, on the ground that it was unconstitutional. It was hard to find a law professor in the country who took them seriously. “The argument about constitutionality is, if not frivolous, close to it,” Sanford Levinson, a University of Texas law-school professor, told the McClatchy newspapers. Erwin Chemerinsky, the dean of the law school at the University of California at Irvine, told the Times, “There is no case law, post 1937, that would support an individual’s right not to buy health care if the government wants to mandate it.” Orin Kerr, a George Washington University professor who had clerked for Justice Anthony Kennedy, said, “There is a less than one-per-cent chance that the courts will invalidate the individual mandate.” Today, as the Supreme Court prepares to hand down its decision on the law, Kerr puts the chance that it will overturn the mandate—almost certainly on a party-line vote—at closer to “fifty-fifty.” The Republicans have made the individual mandate the element most likely to undo the President’s health-care law. The irony is that the Democrats adopted it in the first place because they thought that it would help them secure conservative support. It had, after all, been at the heart of Republican health-care reforms for two decades.

The mandate made its political début in a 1989 Heritage Foundation brief titled “Assuring Affordable Health Care for All Americans,” as a counterpoint to the single-payer system and the employer mandate, which were favored in Democratic circles. In the brief, Stuart Butler, the foundation’s health-care expert, argued, “Many states now require passengers in automobiles to wear seat-belts for their own protection. Many others require anybody driving a car to have liability insurance. But neither the federal government nor any state requires all households to protect themselves from the potentially catastrophic costs of a serious accident or illness. Under the Heritage plan, there would be such a requirement.” The mandate made its first legislative appearance in 1993, in the Health Equity and Access Reform Today Act—the Republicans’ alternative to President Clinton’s health-reform bill—which was sponsored by John Chafee, of Rhode Island, and co-sponsored by eighteen Republicans, including Bob Dole, who was then the Senate Minority Leader.

Klein goes on to discuss the health care policy flip-flop in the broader context of the psychological concept of motivated reasoning,

which Dan Kahan, a professor of law and psychology at Yale, defines as “when a person is conforming their assessments of information to some interest or goal that is independent of accuracy”—an interest or goal such as remaining a well-regarded member of his political party, or winning the next election, or even just winning an argument.

In other words, as functioning human beings, most of us are using our intellect not in the pursuit of Truth, but rather to win arguments.

- Posted using BlogPress from my iPad

Friday, June 22, 2012

Alan Turing

From the BBC site:

The life and achievements of Alan Turing - the mathematician, codebreaker, computer pioneer, artificial intelligence theoretician, and gay/cultural icon - are being celebrated to mark what would have been his 100th birthday on 23 June.

To mark the occasion the BBC has commissioned a series of essays to run across the week, starting with this overview of Turing's legacy by Vint Cerf.

His is a story of astounding highs and devastating lows. A story of a genius whose mathematical insights helped save thousands of lives, yet who was unable to save himself from social condemnation, with tragic results. Ultimately though, it's a story of a legacy that laid the foundations for the modern computer age.

In 1936, while at King's College, Cambridge, Turing published a seminal paper On Computable Numbers which introduced two key concepts - "algorithms" and "computing machines" - that continue to play a central role in our industry today.

Computing before computers

He is remembered most vividly for his work on cryptanalysis at Bletchley Park during World War II, developing in 1940 the so-called electro-mechanical Bombe used to determine the correct rotor and plugboard settings of the German Enigma encryptor to decrypt intercepted messages.

It would be hard to overstate the importance of this work for the Allies in their conduct of the war.

After the war, Turing worked on the design of the Automatic Computing Engine (Ace) at the National Physical Laboratory (NPL) and in 1946, he delivered a paper on the design of a stored program computer.

His work was contemporary with another giant in computer science, John von Neumann, who worked on the Electronic Discrete Variable Automatic Computer (Edvac).

Ace and Edvac were binary machines and both broke new conceptual ground with the notion of a program stored in memory that drove the operation of the machine.

Storing a program in the computer's memory meant that the program could alter itself, opening up remarkable new computing vistas.

Other essays include

The codebreaker who saved 'millions of lives' by Prof Jack Copeland

Jack Copeland is professor of philosophy at the University of Canterbury, New Zealand. He has also built the Turing Archive for the History of Computing, an extensive online resource.

Is he really the father of computing? by Prof Simon Lavington

Simon Lavington is the author of Alan Turing and his Contemporaries: Building the World's First Computers and a former professor of computer science at the University of Essex.

The experiment that shaped artificial intelligence by Prof Noel Sharkey

Gay codebreaker's defiance keeps memory alive by Andrew Hodges

Andrew Hodges is a tutorial fellow in mathematics at Wadham College, University of Oxford, and author of Alan Turing: The Enigma.

- Posted using BlogPress from my iPad

Saturday, February 25, 2012

Mike Kaplan's reminiscences about Stanley Kubrick

Reminiscences about Stanley Kubrick written by Mike Kaplan, a veteran film executive who was Kubrick's marketing man for his film A Clockwork Orange, having also worked extensively on the release of Kubrick's 2001: A Space Odyssey. A Clockwork Orange opened nationally 40 years ago this month.

- How Stanley Kubrick Invented the Modern Box-Office Report (By Accident)

- Inside the First Screening of A Clockwork Orange

- How Stanley Kubrick Shot His Own Newsweek Cover

- How Stanley Kubrick Kept His Eye on the Budget, Down to the Orange Juice

(Via a Twitter link from Roger Ebert?)

- Posted using BlogPress from my iPad

Wednesday, February 15, 2012

"The percentage of fine people is increasing"

From The Thomas Edison Papers at Rutgers:

"I believe the world is slowly getting better. The percentage of fine people is increasing. Man has not yet overcome his malignant environment, but men as animals will improve."

-Thomas Edison. “Mr. Edison’s Views of Life and Work,” Review of Reviews, Jan 1932, vol. 85. Page 31.

From the AAAS News Archives:

Businesses that would like to increase their creativity and “innovate like Edison” should look back at the practices of one of history’s most productive inventors, author and business consultant Sarah Miller Caldicott said on 8 December at the Fourth Annual AAAS-Hitachi Lecture on Science & Society, organized by the AAAS Center of Science, Policy and Society Programs.

A great-grandniece of Thomas Alva Edison, and a descendant of other inventors, Caldicott said she grew up hearing stories about how her relatives worked through problems and dealt with business successes and failures. After studying Edison’s papers housed at Rutgers University and other sources about his work, she published her findings in a 2007 book, Innovate Like Edison, which she co-authored with Michael Gelb.

- Posted using BlogPress from my iPad

Saturday, January 21, 2012

The Higgs boson: One page explanations

On 02012.01.06, AAAS Member Central posted a short article (plus an interview with Lisa Randall) by Brian Dodson explaining why the Higgs Boson is important:

Two recent experiments (ATLAS - A Toroidal Lhc ApparatuS, and CMS - Compact Muon Solenoid) have independently found indications that the Higgs boson may exist (with a mass of about 125 GeV/c², or roughly 133 times the mass of a hydrogen atom). Although these indications are at about the 2 sigma level of certainty (5 sigma levels are required to claim a discovery), the experimental results suggest that the existence and properties of the Higgs boson should be pinned down during 2012, if all goes well.

Why is finding the Higgs boson so important to the future of high energy physics? The Standard Model (SM) explains the existence of massive particles by the Higgs mechanism, in which a spontaneously broken symmetry associated with a scalar field (the Higgs field) results in the appearance of mass. The quantum of the Higgs field is the Higgs boson. It is the last particle predicted by the SM that has still to be discovered experimentally.
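For readers who want to check the numbers in the excerpt, here is a rough Python sketch of the arithmetic. It is not from the AAAS article: the hydrogen-atom rest energy (about 0.93878 GeV) is a value I am supplying, and reading "sigma" as a one-sided tail probability of a normal distribution is my assumption about the convention being used. The function names are made up for illustration.

```python
import math

# Assumption (not from the post): rest energy of a hydrogen atom in GeV,
# i.e. proton plus electron, roughly 938.78 MeV.
HYDROGEN_MASS_GEV = 0.93878

# Figure quoted in the excerpt above.
HIGGS_MASS_GEV = 125.0


def mass_ratio(particle_gev: float, reference_gev: float = HYDROGEN_MASS_GEV) -> float:
    """How many reference masses fit into the particle's mass."""
    return particle_gev / reference_gev


def one_sided_p_value(n_sigma: float) -> float:
    """Probability that a standard normal variable exceeds n_sigma.

    Assumes the one-sided convention; a two-sided reading would double it.
    """
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))


if __name__ == "__main__":
    print(f"Higgs / hydrogen mass ratio: {mass_ratio(HIGGS_MASS_GEV):.1f}")
    print(f"2 sigma tail probability:    {one_sided_p_value(2.0):.4f}")
    print(f"5 sigma tail probability:    {one_sided_p_value(5.0):.2e}")
```

Under those assumptions the ratio comes out near the "roughly 133" quoted above, and the gap between 2 sigma (a few chances in a hundred of a fluke) and 5 sigma (a few in ten million) shows why the early hints were not yet called a discovery.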

The post also linked to a site offering five simple(!?!), one page explanations of the Higgs boson.

In 1993, the UK Science Minister, William Waldegrave, challenged physicists to produce an answer that would fit on one page to the question 'What is the Higgs boson, and why do we want to find it?'

The winning entries, taken from Physics World Volume 6 Number 9, were by:

Mary & Ian Butterworth and Doris & Vigdor Teplitz

Roger Cashmore

David Miller

Tom Kibble

Simon Hands

- Posted using BlogPress from my iPad

Sunday, January 15, 2012

The elderly in pictures

In 2009, the UN estimated that there are only about 455,000 centenarians in the world.

Happy At One Hundred: Emotive Portraits of Centenarians

That's how long in chicken years?

Emotive Photographs of Elderly Animals

- Posted using BlogPress from my iPad

Tuesday, November 29, 2011

Don't Ask Don't Tell replaced by Don't Care

At least for the Marines, the issue of gays in the military turns out to be a big yawn. According to an AP report, "Top Marine says service embracing gay ban repeal," Gen. James Amos, who opposed repeal of Don't Ask Don't Tell (see below*), now says gays in the military are a non-issue:

The apparent absence of angst about gays serving openly in the Marines seemed to confirm Amos' view that the change has been taken in stride, without hurting the war effort.

In the AP interview, he offered an anecdote to make his point. He said that at the annual ball in Washington earlier this month celebrating the birth of the Marine Corps, a female Marine approached Amos's wife, Bonnie, and introduced herself and her lesbian partner.

"Bonnie just looked at them and said, 'Happy birthday ball. This is great. Nice to meet you,'" Amos said. "That is happening throughout the Marine Corps."

According to a WSJ article, Sergeant Major Michael Barrett, recently selected to be the senior enlisted adviser to Marine Corps Commandant Gen. James Amos, joined Gen. Amos in a tour of Marine bases in the Pacific in June:

Sgt. Maj. Barrett also tackled questions on the repeal of “Don’t Ask, Don’t Tell,” the military’s ban on gays serving openly in uniform. The Department of Defense is preparing to implement repeal, and Sgt. Maj. Barrett addressed that issue directly.

“Article 1, Section 8 of the Constitution is pretty simple,” he told a group of Marines at a base in South Korea. “It says, ‘Raise an army.’ It says absolutely nothing about race, color, creed, sexual orientation.

“You all joined for a reason: to serve,” he continued. “To protect our nation, right?”

“Yes, sergeant major,” Marines replied.

“How dare we, then, exclude a group of people who want to do the same thing you do right now, something that is honorable and noble?” Sgt. Maj. Barrett continued, raising his voice just a notch. “Right?”

* In Dec. 2010 Gen. Amos gave congressional testimony, and later participated in a roundtable discussion with reporters. Here's the CSMonitor article.

- Posted using BlogPress from my iPhone

Thursday, October 27, 2011

Risk vs. Uncertainty in Politics

Nate Silver writes this interesting take on "risk" vs. "uncertainty" in politics.

Well, I don’t know what [Cain] should do. This gets at something of the distinction that the economist Frank H. Knight once made between risk and uncertainty. To boil Mr. Knight’s complicated thesis down into a sentence: risk, essentially, is measurable whereas uncertainty is not measurable.

In Mr. Cain’s case, I think we are dealing with an instance where there is considerable uncertainty. Not only do I not know how I would go about estimating the likelihood that Mr. Cain will win the Republican nomination — I’m not sure that there is a good way to do so at all.

But I do know what an analyst should not do: he should not use terms like “never” and “no chance” when applied to Mr. Cain’s chances of winning the nomination, as many analysts have.

- Posted using BlogPress from my iPhone

Tuesday, October 4, 2011

Hispanics Raptured in small AL town

Disappearance opens up job opportunities for real Americans in poultry processing plants.

http://t.co/7XAkeDar

- Posted using BlogPress from my iPhone
